How we run our Breach Response testing, and why

In this blog post our CTO Stefan Dumitrascu explains some of the challenges behind our newly launched Breach Response testing, why things are now different (and better), and the background to some of the decisions we made along the way.

One of our most exciting projects this year has been the Breach Response testing programme. In this article we explain what has changed since last year, and why.

Our work on this series of tests started a few years ago, with the first public reports coming out in 2019. We are always looking to improve our testing, and this year we focused on incorporating the great work from the people over at MITRE Engenuity.

The latest Breach Response test methodology has been revised to take the MITRE ATT&CK framework into account. We wanted to apply clear, compatible attack stages to our tests.

Detection vs. Protection

We can now test using two different rating paradigms: Protection mode and Detection mode. If you care most about the insight an Endpoint Detection and Response (EDR) product gives during a breach, look for Detection mode reports. If you want to know what level of protection a product affords at any attack stage, you need Protection mode.

But this has the potential to become confusing. Breach Response tests that are run at the same time, but against different products, could report different things. One might show how well a product protects, while another assesses how well it detects breaches. A protection test published in Q2 2020 would not be directly comparable to a detection test published at the same time.

Then there are the threats used. We didn't always use the same threats in each period for each test. SE Labs runs most of its tests privately, and clients need us to behave as different groups of attackers for their varying purposes.

We needed a way to clearly identify the groups of threats we were using. Cue the Breach Response Threat Series (BRTS).

Our breach response testing attack stages are compatible with the MITRE ATT&CK framework

(Threat) actor line-up

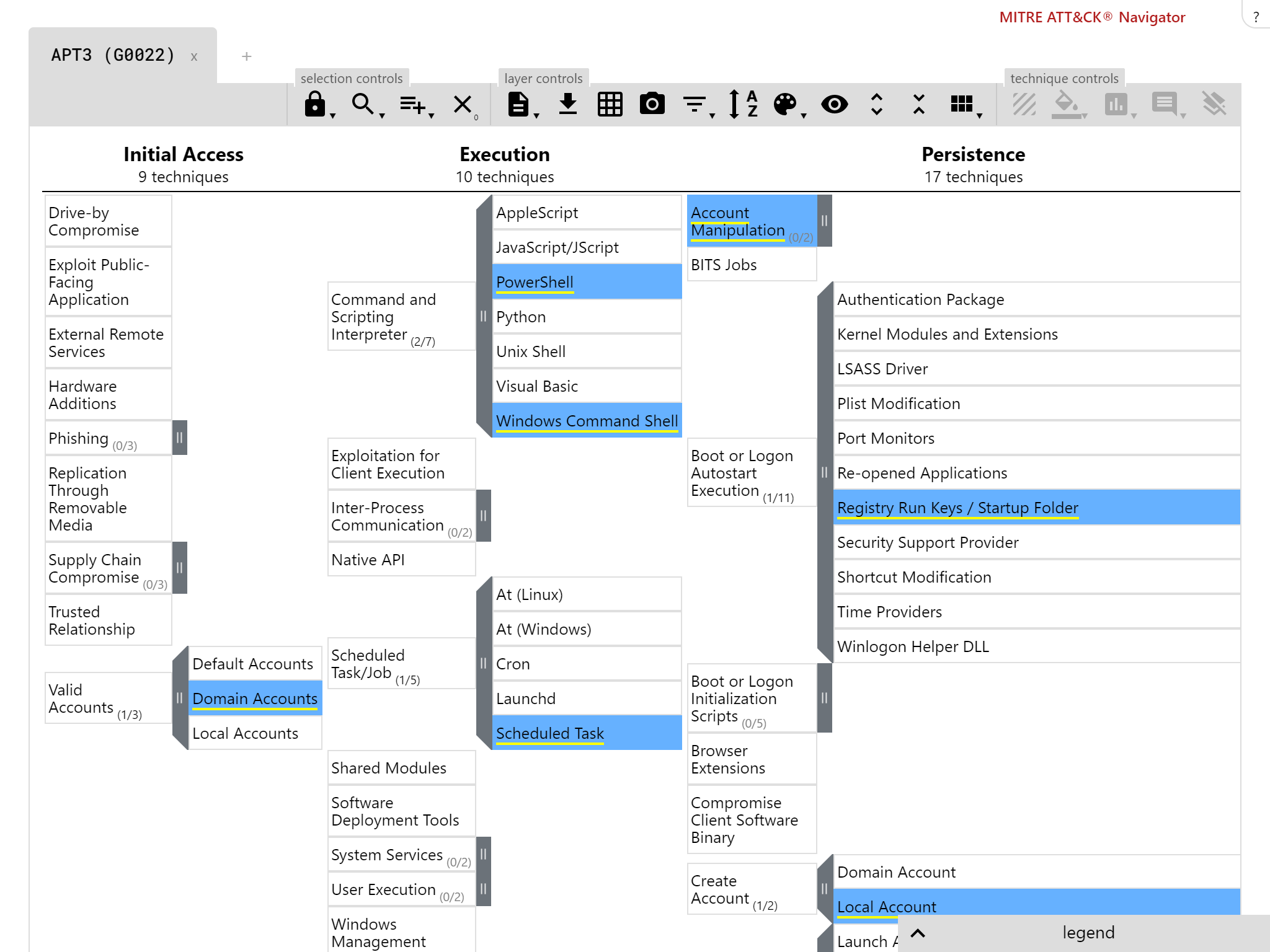

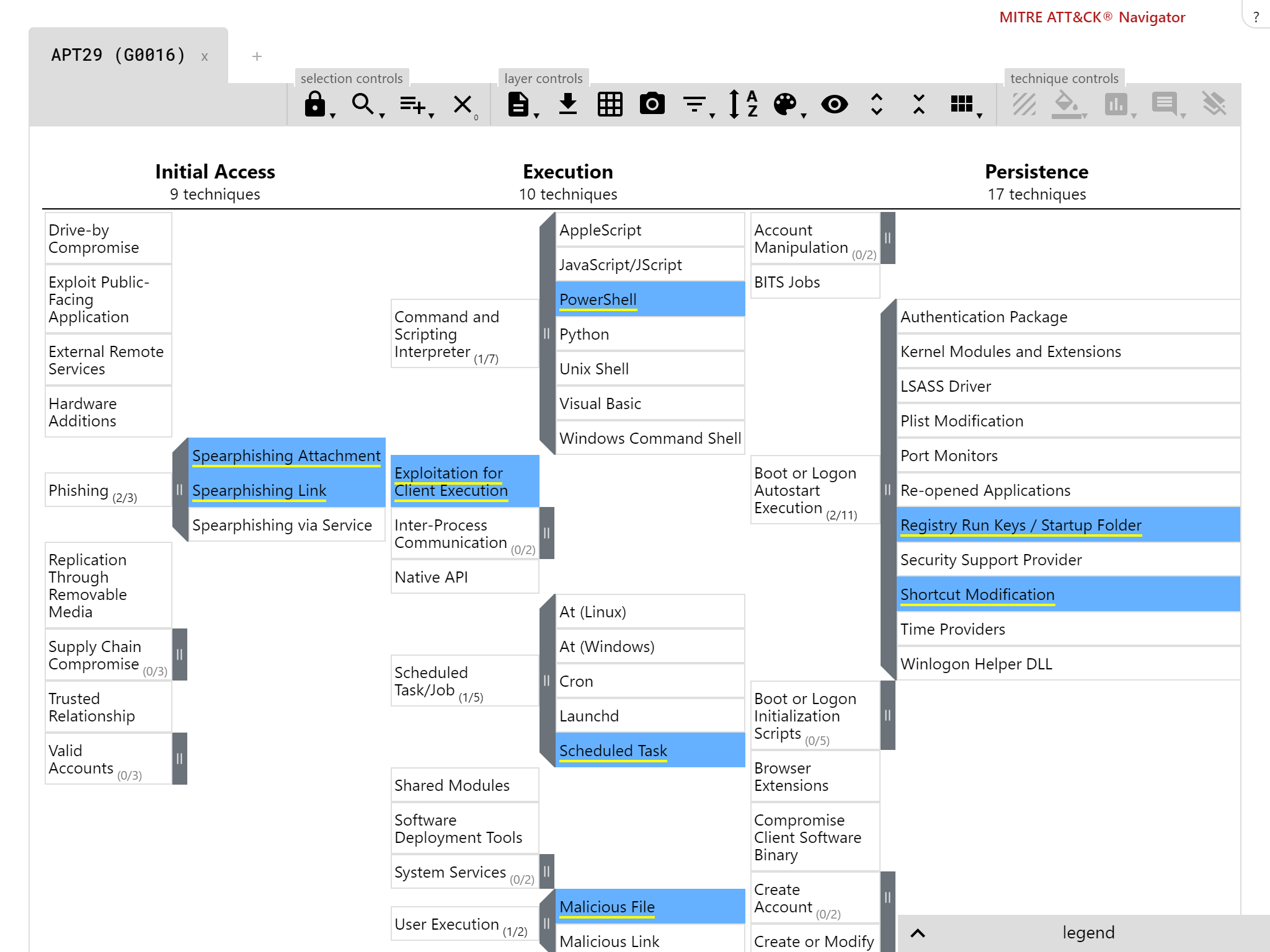

With these changes in mind, we aimed to launch a set of quarterly Breach Response reports. By popular demand, we tackled the main APT groups used in the MITRE ATT&CK evaluations. The first two were APT3 and APT29.

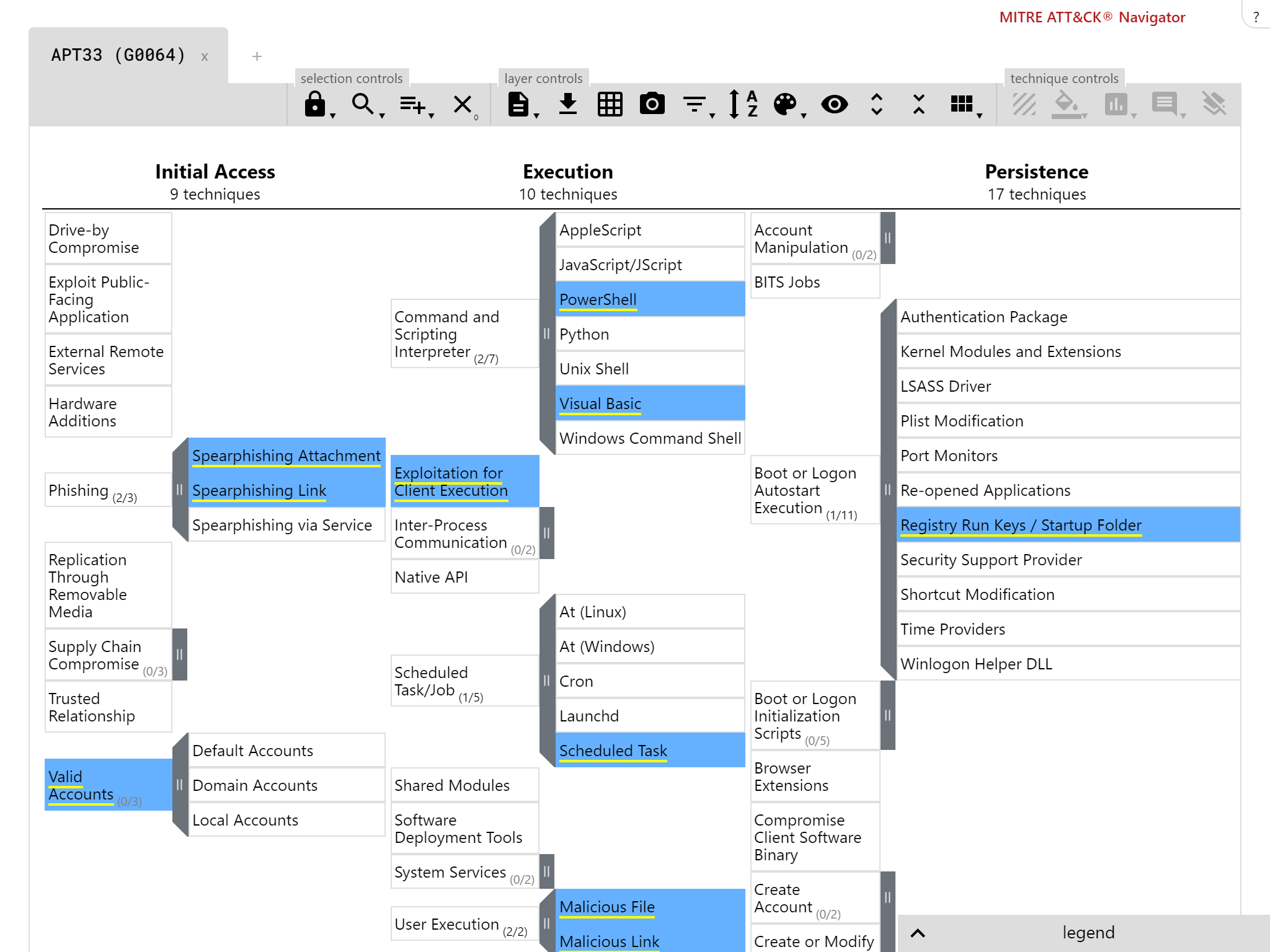

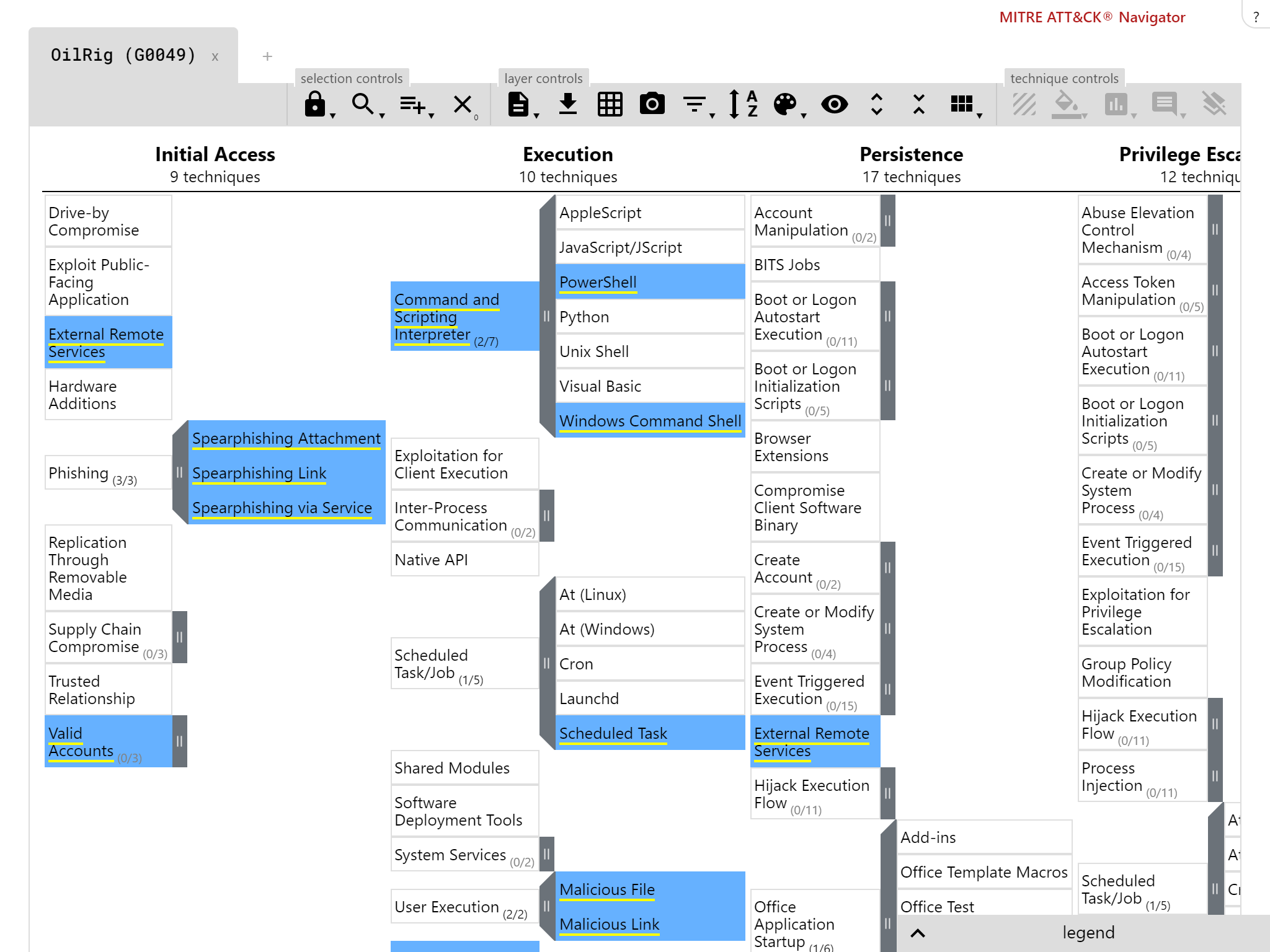

We wanted a broader range of threats, so we added two more groups (APT33 and APT34) to the initial pack. BR Threat Series 1 (BRTS1) was born.

BRTS1: Attack groups used in current MITRE ATT&CK evaluations and that use public tools

Background: APT3, APT29

Our clients most frequently requested testing with the same threats as those used in the first two ATT&CK evaluations, so including them was a no-brainer. The APT29 actors in particular are exciting (to us as testers) for the future, as they recently reappeared, targeting organisations working on coronavirus vaccines. The techniques we saw were already known to us, which is not surprising. We don't expect massive innovation when existing hacking techniques work well.

Background: APT33, APT34 (Oilrig)

APT33 interested us particularly because this threat actor has made use of publicly available tools. We know this from research by the great teams over at FireEye and others. As we mentioned in our previous posts about testing like hackers, this supports our push to use publicly available tools in our tests as much as possible.

Oilrig, on the other hand, was interesting because of its use of malicious CHM (compiled HTML help) files to initiate attacks, alongside its focus on PowerShell. We were curious to see how well the vendors we were testing, publicly and privately, covered these attacks, as there haven't been extensive public reports on this.

A public report featuring this series, in which we tested CrowdStrike Falcon in Detection mode, can be found here.

BRTS2: Attack groups focused on financial gain

Background: FIN7/Carbanak

These groups were the threats of choice for the 2020 MITRE ATT&CK evaluations, so choosing them was a no-brainer for us. Once again, many of our clients had asked for them. They were also a great opportunity to start incorporating Linux-based test cases.

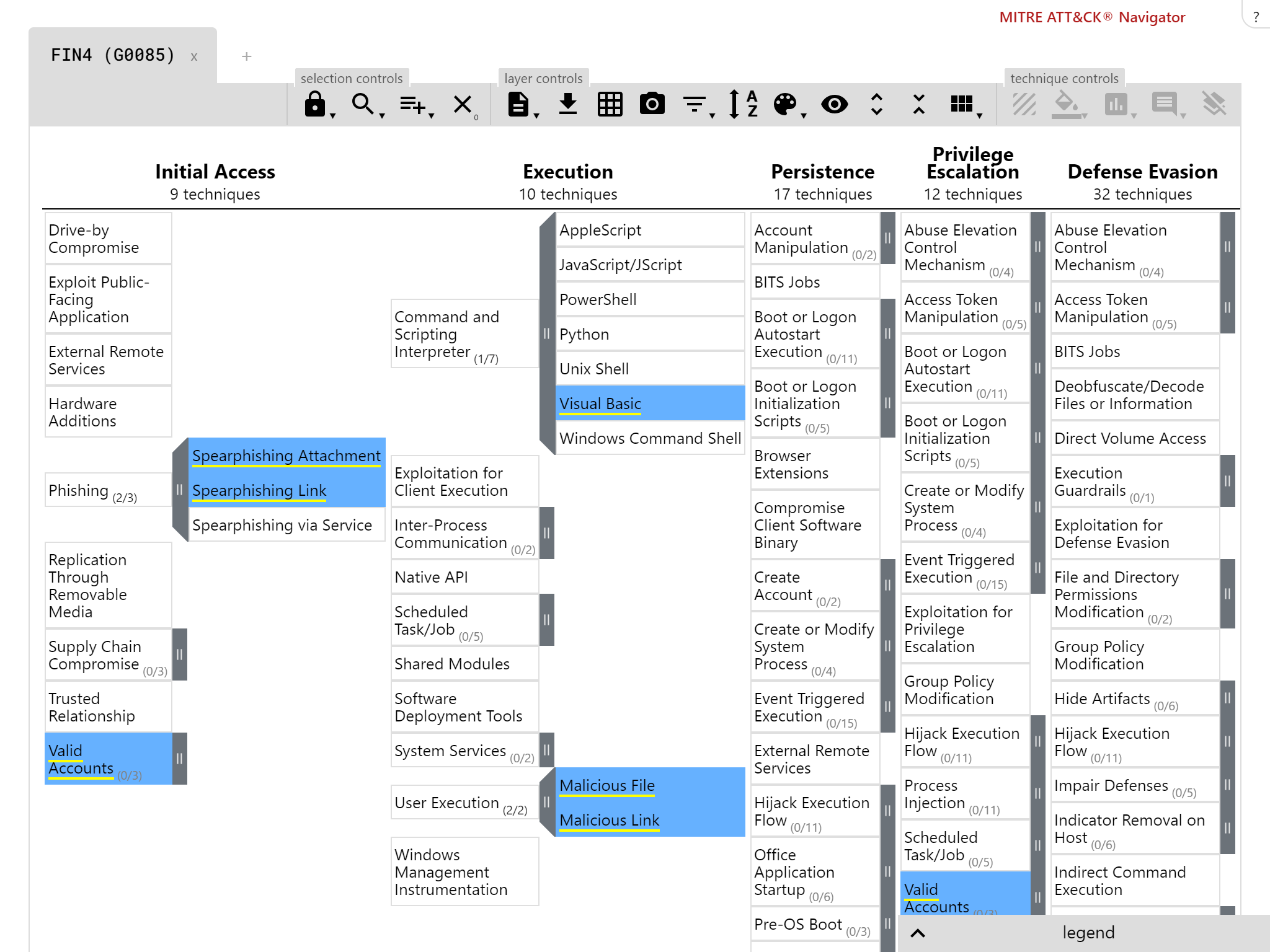

Background: FIN4

One of the additions we had wanted to make to our Breach Response testing for ages was to look at post-compromise scenarios. What happens when the attacker has already established a foothold in your environment, and you deploy a new security product?

FIN4 operated by collecting legitimate documents, weaponising them and then sending them to targets. The idea was to make its attacks more convincing. Because the documents were stolen from the target, or from one of the target's contacts, we worked on the premise that a compromise had already taken place.

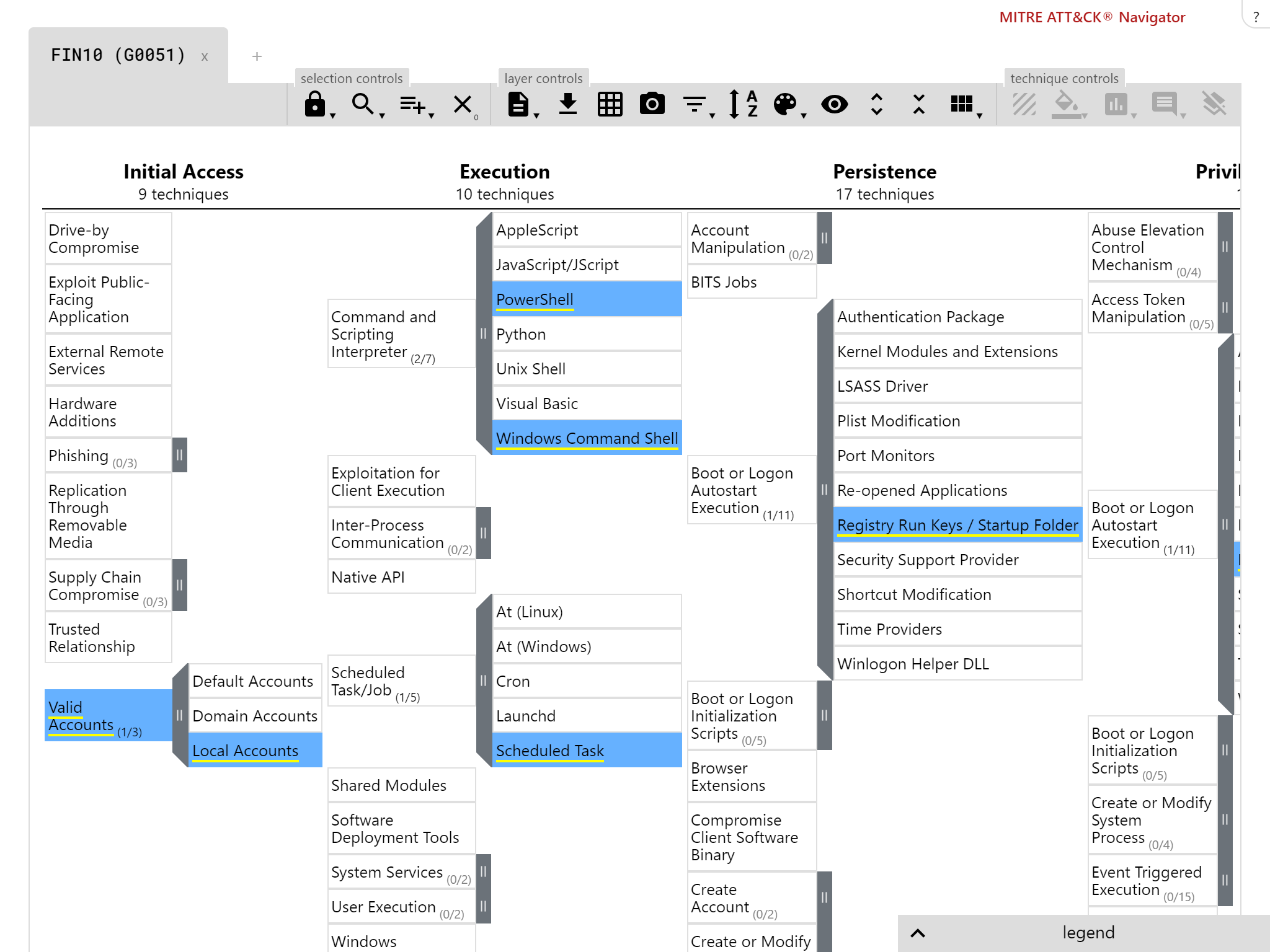

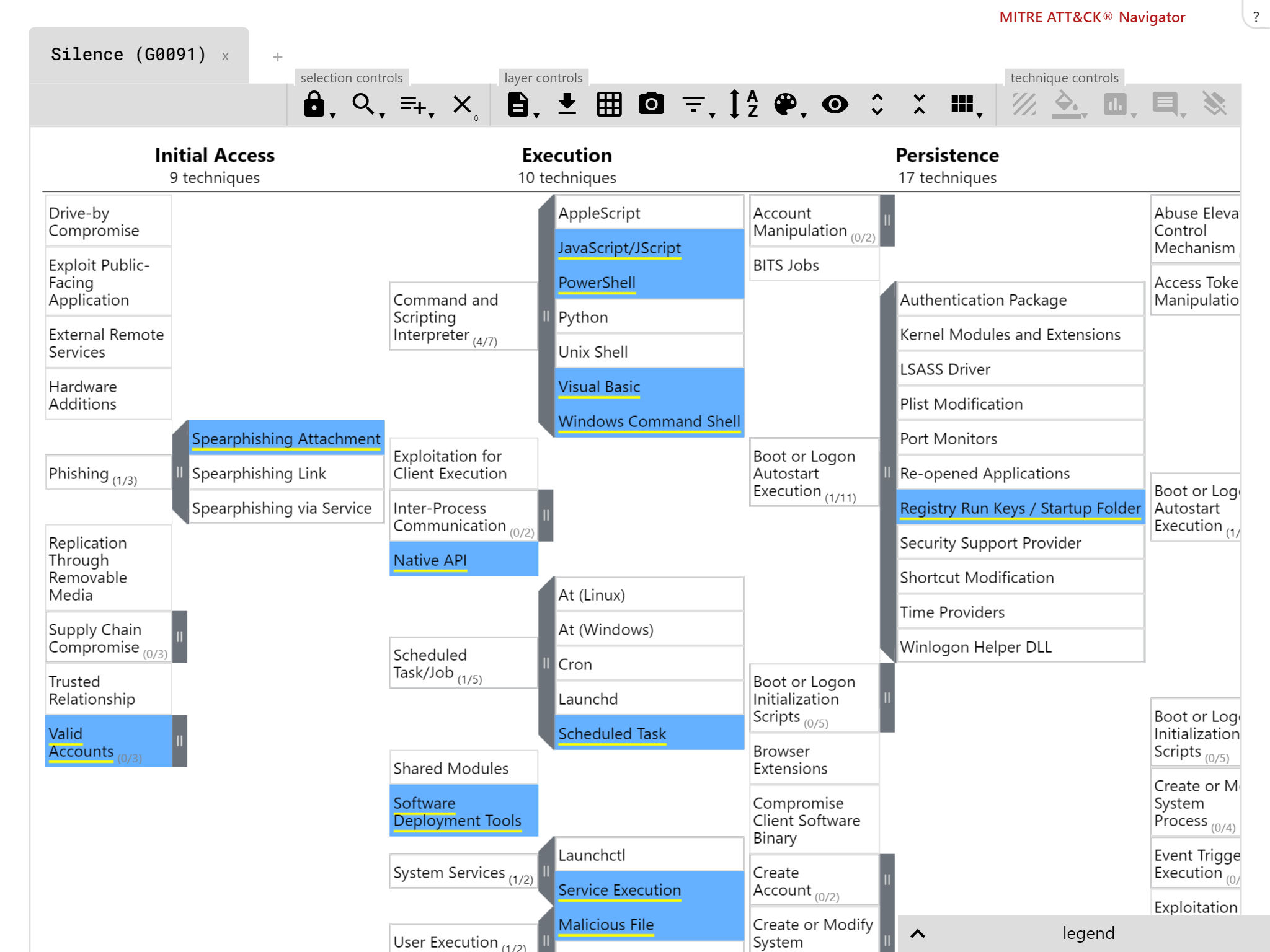

Background: FIN10, Silence

The theme for this report was groups focused on financial gain. FIN10 was exciting for us because, once again, we saw the use of publicly available tools in its attacks. Its use of PowerShell makes the group a tricky attacker to deal with. Silence added yet another initial file format to our test set, as the group used .LNK files alongside the usual macro-based attacks.

A public report featuring this series, in which we tested SentinelOne in Protection mode, can be found here.

BRTS3: Attack groups focused on targeting the energy sector

The APT groups in this Breach Response Threat Series are Dragonfly/Dragonfly 2.0, APT19/Deep Panda and APT34 (Oilrig).

These threat actors have not targeted the energy sector exclusively, but we chose them because they have previously attacked energy companies around the world. We are very excited to work with our clients on these attacks. We do not expect to publish our work on these groups until 2021, as we are developing these test attacks during the last few months of 2020.

When is too much not enough?

With all of these groups now set up, we are improving our methodology for this series of tests. We have been running this kind of work for a few years and we are ready to expand the scope of the testing. Alert fatigue is an issue we have seen arise with some EDR-focused products.

Providing too much information can reduce a product’s value.

We would like to reward products that make SOC analysts' jobs easier. Products that tell the story of the attack efficiently, without overwhelming their users with alerts, can make the difference between catching an attacker before they gain a substantial foothold in your environment and discovering a problem long after the fact, if at all.

We are also re-examining false positive (FP) testing for the products enrolled in these tests, which goes hand in hand with alert fatigue. Replicating a real-world environment for these products requires a lot of thought, and we need to experiment further before we move to a more complex FP approach.

Expect an update to our methodology in the first few months of 2021. We will also launch a new online portal that adds transparency to our testing and helps readers of our reports understand how to use products most effectively in their own environments.
